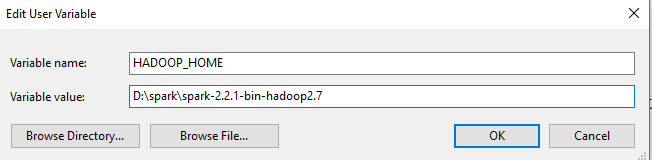

After getting all the items in section A, let’s set up PySpark.

1. Unpack the .tgz file from the Spark distribution in item 1 by right-clicking on the file icon and selecting 7-zip > Extract Here. For example, I unpacked mine with 7-zip from step A6 and put it under D:\spark\spark-2.2.1-bin-hadoop2.7
2. Move the winutils.exe downloaded in step A3 to the \bin folder of the Spark distribution. For example, D:\spark\spark-2.2.1-bin-hadoop2.7\bin\winutils.exe
3. Add environment variables: the environment variables let Windows find where the files are when we start the PySpark kernel. You can find the environment variable settings by putting “environ…” in the search box. The variables to add are, in my example, Name …
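To make the environment variable step more concrete, here is a minimal sketch that derives the values from the unpacked Spark folder. The variable names are my assumption, since the list of names is cut off above: `SPARK_HOME` is the name findspark looks for, `HADOOP_HOME` is the folder that contains `bin\winutils.exe`, and `PATH_ENTRY` is a hypothetical key for the folder you would append to `PATH`. The function `spark_env_vars` is made up for illustration.

```python
from pathlib import PureWindowsPath


def spark_env_vars(spark_dir: str) -> dict:
    """Return the environment variable values implied by a Spark folder.

    Assumes winutils.exe was moved into <spark_dir>\\bin, so the same
    folder can serve as both SPARK_HOME and HADOOP_HOME.
    """
    root = PureWindowsPath(spark_dir)
    return {
        "SPARK_HOME": str(root),
        # HADOOP_HOME is the folder *above* bin, not bin itself.
        "HADOOP_HOME": str(root),
        # Hypothetical key: the folder to append to PATH.
        "PATH_ENTRY": str(root / "bin"),
    }


if __name__ == "__main__":
    for name, value in spark_env_vars(r"D:\spark\spark-2.2.1-bin-hadoop2.7").items():
        print(f"{name} = {value}")
```

In a notebook you could copy the first two values into `os.environ` and then call `findspark.init()` before `import pyspark`, instead of (or in addition to) setting them through the Windows settings dialog.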

#How to install spark on windows 7 download

Section A lists the items you need:

1. The Spark distribution, which comes as a .tgz file.
2. Python and Jupyter Notebook. You can get both by installing the Python 3.x version of the Anaconda distribution.
3. winutils.exe, a Hadoop binary for Windows, from Steve Loughran’s GitHub repo. Go to the Hadoop version that corresponds to your Spark distribution and find winutils.exe under /bin.
4. The findspark Python module, which can be installed by running python -m pip install findspark in either the Windows command prompt or Git bash, provided Python is installed in item 2. You can find the command prompt by searching cmd in the search box.
5. Java. If you don’t have Java or your Java version is 7.x or less, download and install Java from Oracle. When I wrote this I recommended getting the latest JDK (then version 9.0.1); note that the Spark 2.x releases were built for Java 8 and can fail on newer JDKs, so JDK 8 is the safer choice if you run into errors.
6. 7-zip. To unpack the .tgz file on Windows, you can download and install 7-zip.
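Before moving on, it can help to check the Java and findspark items from a Python prompt. The sketch below is only an illustration: `java_major_version` and `missing_prerequisites` are names I made up, and the parser assumes the usual `version "..."` line that `java -version` prints.

```python
import importlib.util
import re
import shutil


def java_major_version(version_output: str):
    """Extract the major Java version from `java -version` output.

    Handles both the old scheme ("1.7.0_80" means Java 7) and the
    newer one ("9.0.1" means Java 9). Returns None if nothing matches.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first, second = match.group(1), match.group(2)
    if first == "1" and second is not None:
        return int(second)
    return int(first)


def missing_prerequisites() -> list:
    """List which of the checked items cannot be found on this machine."""
    missing = []
    if shutil.which("java") is None:  # java is not on PATH
        missing.append("java")
    if importlib.util.find_spec("findspark") is None:  # module not installed
        missing.append("findspark")
    return missing


if __name__ == "__main__":
    print("Missing:", missing_prerequisites())
```

If `java_major_version` returns 7 or less for the text printed by `java -version`, that is the case where item 5 says to install a newer Java.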

#How to install spark on windows 7 windows 7

I’ve tested this guide on a dozen Windows 7 and 10 PCs in different languages.

#How to install spark on windows 7 how to

In this post, I will show you how to install and run PySpark locally in Jupyter Notebook on Windows.

#How to install spark on windows 7 code

When I write PySpark code, I use Jupyter notebook to test my code before submitting a job on the cluster.

You need to have Administrator rights on your laptop. All the following commands must be executed in a command-line window (cmd) run as Administrator, i.e. using the Run as administrator option while executing cmd. Read the official document in Microsoft TechNet (…(v=ws.10).aspx).

When the winutils binary cannot be found, Spark logs an error like this:

    16/12/26 21:34:11 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
    at .Shell.getQualifiedBinPath(Shell.java:379)
    at .Shell.getWinUtilsPath(Shell.java:394)
    at .Shell.(Shell.java:387)
    at .conf.HiveConf$ConfVars.findHadoopBinary(HiveConf.java:2327)
    at .conf.HiveConf$ConfVars.(HiveConf.java:365)
    at .conf.HiveConf.(HiveConf.java:105)
    at 0(Native Method)
    at (Class.java:348)
    at .Utils$.classForName(Utils.scala:228)
    at .SparkSession$.hiveClassesArePresent(SparkSession.scala:963)
    at .Main$.createSparkSession(Main.scala:91)

Download the winutils.exe binary from the repository. NOTE: You should select the version of Hadoop the Spark distribution was compiled with. Save the winutils.exe binary to a directory of your choice, then set HADOOP_HOME to reflect the directory with winutils.exe (without bin).

Spark may then log warnings such as:

    16/12/26 22:05:41 WARN General: Plugin (Bundle) "org.datanucleus" is already registered. The URL "file:/C:/spark-2.0.2-bin-hadoop2.7/jars/datanucleus-core-3.2.10.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/C:/spark-2.0.2-bin-hadoop2.7/bin/./jars/datanucleus-core-…
    16/12/26 22:05:41 WARN General: Plugin (Bundle) "" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/C:/spark-2.0.2-bin-hadoop2.7/jars/datanucleus-api-jdo-3.2.6.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/C:/spark-2.0.2-bin…
    The URL "file:/C:/spark-2.0.2-bin-hadoop2.7/bin/./jars/datanucleus-rdbms-3.2.9.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/C:/spark-2.0.2-bin…

If you see the above output, you're done. You should now be able to run Spark applications on Windows.

Changing Configuration Property: create a hive-site.…
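The `null\bin\winutils.exe` path in the error above has `null` where the HADOOP_HOME value would normally go, which points to the variable not being set. As a rough sketch of how you might check for the common mistakes from Python, the hypothetical helper `winutils_problem` below returns a short description of the first problem it finds, or `None` if the layout looks right.

```python
import os
from pathlib import Path


def winutils_problem(env=None):
    """Describe the first HADOOP_HOME problem found, or return None.

    `env` defaults to the current process environment; passing a plain
    dict makes the check easy to try out without changing any settings.
    """
    env = os.environ if env is None else env
    home = env.get("HADOOP_HOME")
    if not home:
        return "HADOOP_HOME is not set"
    if Path(home).name.lower() == "bin":
        # The variable should point at the folder that contains bin.
        return "HADOOP_HOME should not include the bin folder"
    if not (Path(home) / "bin" / "winutils.exe").is_file():
        return "winutils.exe was not found under HADOOP_HOME/bin"
    return None


if __name__ == "__main__":
    print(winutils_problem() or "HADOOP_HOME looks fine")
```

Running this in the same command prompt or notebook you use for Spark shows whether that process actually sees the variable, which is useful because environment changes only reach programs started after the change.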
